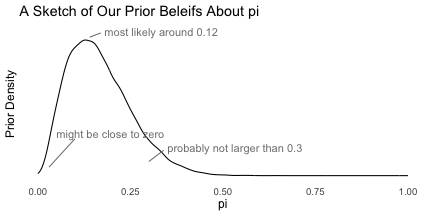

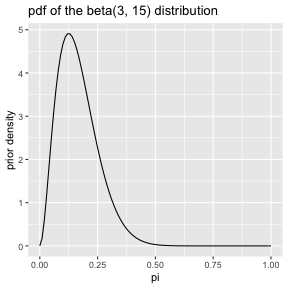

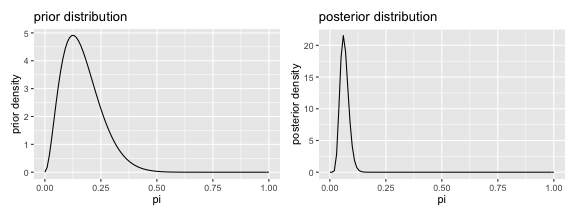

class: center, middle, inverse, title-slide .title[ # Point Estimates ] .date[ ### updated: 2022-11-14 ] --- class: middle layout: true --- class: center ### King, Keohane, and Verba (1996, p. 46) define *inference* as "the process of using the facts we know to learn about facts we do not know." Consider the following three targets of inference: --- ## The Average Treatment Effect - Suppose you conduct an experiment in which you assign `\(N\)` subjects to either treatment or control. - For each subject `\(n\)`, you observe either the outcome under the treatment condition `\(Y^{T}_n\)` or the outcome under control `\(Y^{C}_n\)`. - Define the *average treatment effect* or *ATE* as `\(\displaystyle \frac{1}{N}\sum_{n = 1}^{N}\left( Y^{T}_n - Y^{C}_n \right)\)`. - Because we cannot place subject `\(n\)` in both treatment and control, we cannot observe the ATE; we can only estimate it. --- ## Features of a Population, Using a Sample - Suppose we have a random sample of `\(N\)` observations from a much larger population. - We can use the sample to estimate features of the population, such as the average of a variable or correlation between two variables. - Because we cannot (or perhaps choose to not) observe the each member of population, we cannot observe the features of the population directly; we can only estimate it. --- ## Parameters of a Stochastic Model - Suppose the outcome variable `\(y\)` is a collection of samples from a distribution `\(f(\theta)\)`. - We cannot observe `\(\theta\)` directly, but we can use the observed samples `\(y\)` to learn estimate `\(\theta\)`. - Example, suppose you have a binary outcome variable `\(y\)` that you model as draws from a Bernoulli distribution. Then `\(y_i \sim \text{Bernoulli}(\pi)\)`. Your inferential target would not be the proportion of ones in the sample, but the value of `\(\pi\)`. --- Modeling these three targets of inference identical in some situations and very similar in many. **I focus on estimating the parameters of a stochastic model.** In this situation, we observe a sample `\(y\)` from a particular distribution and use the sample to estimate the parameters of the distribution. --- We consider two types of estimates: 1. *Point Estimates*: using the observed data to calculate a *single value* or best-guess for the unobservable quantity of interest. 1. *Interval Estimates*: using the observed data to calculate a *range of values* for the unobservable quantity of interest. For each type of estimate, we consider: 1. How to *develop* an estimator. 1. How to *evaluate* an estimator. --- # Three Types Today, we'll discuss three different types of point estimates 1. Bayesian Point Estimates 1. Method of Moments 1. Maximum Likelihood --- class: middle # Bayesian Point Estimates Bayesian inference follows a simple recipe: 1. Choose a distribution for the data. 1. Choose a distribution to describe your prior beliefs. 1. Update the prior distribution upon observing the data by computing the posterior distribution. 1. Summarize the **location** of the posterior distribution. --- # Mechanics of Bayesian Inference Suppose a random sample from a distribution `\(f(x; \theta)\)` that depends on the unknown parameter `\(\theta\)`. Bayesian inference models our *beliefs* about the unknown parameter `\(\theta\)` as a distribution It answers the question: what should we believe about `\(\theta\)`, given the observed samples `\(x = \{x_1, x_2, ..., x_n\}\)` from `\(f(x; \theta)\)`? These beliefs are simply the conditional distribution `\(f(\theta \mid x)\)`. By Bayes' rule, `\(\displaystyle f(\theta \mid x) = \frac{f(x \mid \theta)f(\theta)}{f(x)} = \frac{f(x \mid \theta)f(\theta)}{\displaystyle \int_{-\infty}^\infty f(x \mid \theta)f(\theta) d\theta}\)`. `\(\displaystyle \underbrace{f(\theta \mid x)}_{\text{posterior}} = \frac{\overbrace{f(x \mid \theta)}^{\text{likelihood}} \times \overbrace{f(\theta)}^{\text{prior}}}{\displaystyle \underbrace{\int_{-\infty}^\infty f(x \mid \theta)f(\theta) d\theta}_{\text{normalizing constant}}}\)` --- # Mechanics of Bayesian Inference There are four parts to a Bayesian analysis: `\(\displaystyle \underbrace{f(\theta \mid x)}_{\text{posterior}} = \frac{\overbrace{f(x \mid \theta)}^{\text{likelihood}} \times \overbrace{f(\theta)}^{\text{prior}}}{\displaystyle \underbrace{\int_{-\infty}^\infty f(x \mid \theta)f(\theta) d\theta}_{\text{normalizing constant}}}\)` 1. `\(f(\theta \mid x)\)`. "The posterior;" what we're trying to find. This distribution models our beliefs about parameter `\(\theta\)` given the data `\(x\)`. 1. `\(f(x \mid \theta)\)`. "The likelihood." This distribution model conditional density/probability of the data `\(x\)` given the parameter `\(\theta\)`. We need to invert the conditioning in order to find the posterior. 1. `\(f(\theta)\)`. "The prior;" our beliefs about `\(\theta\)` prior to observing the sample `\(x\)`. 1. `\(f(x) =\int_{-\infty}^\infty f(x \mid \theta)f(\theta) d\theta\)`. A normalizing constant. Recall that the role of the normalizing constant is to force the distribution to integrate or sum to one. Therefore, we can safely ignore this constant until the end, and then find proper normalizing constant. It's convenient to choose a **conjugate** prior distribution that, when combined with the likelihood, produces a posterior from the same family. --- # The Toothpaste Cap Problem As a running example, we use the **toothpaste cap problem**: > We have a toothpaste cap--one with a wide bottom and a narrow top. We're going to toss the toothpaste cap. It can either end up lying on its side, its (wide) bottom, or its (narrow) top. > We want to estimate the probability of the toothpaste cap landing on its top. > We can model each toss as a Bernoulli trial, thinking of each toss as a random variable `\(X\)` where `\(X \sim \text{Bernoulli}(\pi)\)`. If the cap lands on its top, we think of the outcome as 1. If not, as 0. > Suppose we toss the cap `\(N\)` times and observe `\(k\)` tops. What is the posterior distribution of `\(\pi\)`? --- # The Likelihood (The Boring Part) According to the model `\(f(x_i \mid \pi) = \pi^{x_i} (1 - \pi)^{(1 - x_i)}\)`. Because the samples are iid, we can find the *joint* distribution `\(f(x) = f(x_1) \times ... \times f(x_N) = \prod_{i = 1}^N f(x_i)\)`. We're just multiplying `\(k\)` `\(\pi\)`s (i.e., each of the `\(k\)` ones has probability `\(\pi\)`) and `\((N - k)\)` `\((1 - \pi)\)`s (i.e., each of the `\(N - k\)` zeros has probability `\(1 - \pi\)`), so that the `\(f(x | \pi) = \pi^{k} (1 - \pi)^{(N - k)}\)`. $$ \text{the likelihood: } f(x | \pi) = \pi^{k} (1 - \pi)^{(N - k)}, \text{where } k = \sum_{n = 1}^N x_n \\ $$ --- # The Prior (The Awkward Part) The prior describes your beliefs about `\(\pi\)` *before* observing the data. Here are some questions that we might ask ourselves the following questions: 1. What's the most likely value of `\(\pi\)`? 1. Are our beliefs best summarizes by a distribution that's skewed to the left or right? *To the right.* 1. `\(\pi\)` is about _____, give or take _____ or so. 1. There's a 25% chance that `\(\pi\)` is less than ____. 1. There's a 25% chance that `\(\pi\)` is greater than ____. --- # The Prior (The Awkward Part) The prior describes your beliefs about `\(\pi\)` *before* observing the data. Here are some questions that we might ask ourselves the following questions: 1. What's the most likely value of `\(\pi\)`? *Perhaps 0.15.* 1. Are our beliefs best summarizes by a distribution that's skewed to the left or right? *To the right.* 1. `\(\pi\)` is about _____, give or take _____ or so. *Perhaps 0.17 and 0.10.* 1. There's a 25% chance that `\(\pi\)` is less than ____. *Perhaps 0.05.* 1. There's a 25% chance that `\(\pi\)` is greater than ____. *Perhaps 0.20*. --- # The Prior (The Awkward Part) Given these answers, we can sketch the pdf of the prior distribution for `\(\pi\)`. <!-- --> --- # The Prior (The Awkward Part) We need to find a density function that matches these prior beliefs. For the Bernoulli model, the *beta distribution* is the conjugate prior. (The beta prior produces a beta posterior.) While a conjugate prior is not crucial in general, it makes the math much more tractable. --- # The Prior (The Awkward Part) So then what beta distribution captures our prior beliefs? See the code snippet linked in today's HW assignment. Go find *your* prior density. --- # The Prior (The Awkward Part) I find that setting the parameters `\(\alpha\)` and `\(\beta\)` of the beta distribution to 3 and 15, respectively, captures my prior beliefs about the probability of getting a top. <!-- --> The pdf of the beta distribution is `\(f(x) = \frac{1}{B(\alpha, \beta)} x^{\alpha - 1}(1 - x)^{\beta - 1}\)`. Remember that `\(B()\)` is the beta function, so `\(\frac{1}{B(\alpha, \beta)}\)` is a constant. --- # The Prior (The Awkward Part) Let's denote our chosen values of `\(\alpha = 3\)` and `\(\beta = 15\)` as `\(\alpha^*\)` and `\(\beta^*\)`. As we see in a moment, it's convenient distinguish the parameters in the prior distribution from other parameters. `\(f(\pi) = \frac{1}{B(\alpha^*, \beta^*)} \pi^{\alpha^* - 1}(1 - \pi)^{\beta^* - 1}\)` --- # The Posterior (The Fun Part) We need to compute the posterior by multiplying the likelihood times the prior (and then finding the normalizing constant). `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\overbrace{f(x \mid \pi)}^{\text{likelihood}} \times \overbrace{f(\pi)}^{\text{prior}}}{\displaystyle \underbrace{\int_{-\infty}^\infty f(x \mid \pi)f(\pi) d\pi}_{\text{normalizing constant}}}\)` We plug in the likelihood, plug in the prior, and denote the normalizing constant as `\(C_1\)` to remind ourselves that it's just a constant. `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\overbrace{\left[ \pi^{k} (1 - \pi)^{(N - k) }\right] }^{\text{likelihood}} \times \overbrace{ \left[ \frac{1}{B(\alpha^*, \beta^*)} \pi^{\alpha^* - 1}(1 - \pi)^{\beta^* - 1} \right] }^{\text{prior}}}{\displaystyle \underbrace{C_1}_{\text{normalizing constant}}}\)` --- # The Posterior (The Fun Part) `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\overbrace{\left[ \pi^{k} (1 - \pi)^{(N - k) }\right] }^{\text{likelihood}} \times \overbrace{ \left[ \frac{1}{B(\alpha^*, \beta^*)} \pi^{\alpha^* - 1}(1 - \pi)^{\beta^* - 1} \right] }^{\text{prior}}}{\displaystyle \underbrace{C_1}_{\text{normalizing constant}}}\)` We need to simplify the right-hand side. First, notice that the term `\(\frac{1}{B(\alpha^*, \beta^*)}\)` in the numerator is just a constant. We can incorporate that constant term with `\(C_1\)` by multiplying top and bottom by `\(B(\alpha^*, \beta^*)\)` and letting `\(C_2 = C_1 \times B(\alpha^*, \beta^*)\)`. `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\overbrace{\left[ \pi^{k} (1 - \pi)^{(N - k) }\right] }^{\text{likelihood}} \times \overbrace{ \left[ \pi^{\alpha^* - 1}(1 - \pi)^{\beta^* - 1} \right] }^{\text{kernel of former prior}} }{\displaystyle \underbrace{C_2}_{\text{new normalizing constant}}}\)` --- # The Posterior (The Fun Part) `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\overbrace{\left[ \pi^{k} (1 - \pi)^{(N - k) }\right] }^{\text{likelihood}} \times \overbrace{ \left[ \pi^{\alpha^* - 1}(1 - \pi)^{\beta^* - 1} \right] }^{\text{kernel of former prior}} }{\displaystyle \underbrace{C_2}_{\text{new normalizing constant}}}\)` Now we can collect the exponents with base `\(\pi\)` and the exponents with base `\((1 - \pi)\)`. `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\left[ \pi^{k} \times \pi^{\alpha^* - 1} \right] \times \left[ (1 - \pi)^{(N - k) } \times (1 - \pi)^{\beta^* - 1} \right] }{ C_2}\)` --- # The Posterior (The Fun Part) `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\left[ \pi^{k} \times \pi^{\alpha^* - 1} \right] \times \left[ (1 - \pi)^{(N - k) } \times (1 - \pi)^{\beta^* - 1} \right] }{ C_2}\)` Recalling that `\(x^a \times x^b = x^{a + b}\)`, we combine the powers. `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\left[ \pi^{(\alpha^* + k) - 1} \right] \times \left[ (1 - \pi)^{[\beta^* + (N - k)] - 1} \right] }{ C_2}\)` --- # The Posterior (The Fun Part) `\(\displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} = \frac{\left[ \pi^{(\alpha^* + k) - 1} \right] \times \left[ (1 - \pi)^{[\beta^* + (N - k)] - 1} \right] }{ C_2}\)` Except it might not (doesn't, in this case) integrate to one. We just need to find `\(C_2\)` so that it does integrate to one. Because we're clever, we notice that this is *almost* a beta distribution with `\(\alpha = (\alpha^* + k)\)` and `\(\beta = [\beta^* + (N - k)]\)`. If `\(C_2 = B(\alpha^* + k, \beta^* + (N - k))\)`, then it *would* integrate to one, because the the posterior is *exactly* a `\(\text{beta}(\alpha^* + k, \beta^* + [N - k]))\)` distribution. --- # The Posterior (The Fun Part) This is completely expected. We chose a beta distribution for the prior because it would give us a beta posterior distribution. For simplicity, we can denote the parameter for the beta posterior as `\(\alpha^\prime\)` and `\(\beta^\prime\)`, so that `\(\alpha^\prime = \alpha^* + k\)` and `\(\beta^\prime = \beta^* + [N - k]\)` $$ `\begin{aligned} \displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} &= \frac{ \pi^{\overbrace{(\alpha^* + k)}^{\alpha^\prime} - 1} \times (1 - \pi)^{\overbrace{[\beta^* + (N - k)]}^{\beta^\prime} - 1} }{ B(\alpha^* + k, \beta^* + [N - k])} \\ &= \frac{ \pi^{\alpha^\prime - 1} \times (1 - \pi)^{\beta^\prime - 1} }{ B(\alpha^\prime, \beta^\prime)}, \text{where } \alpha^\prime = \alpha^* + k \text{ and } \beta^\prime = \beta^* + [N - k] \end{aligned}` $$ --- # The Posterior (The Fun Part) $$ `\begin{aligned} \displaystyle \underbrace{f(\pi \mid x)}_{\text{posterior}} &= \frac{ \pi^{\overbrace{(\alpha^* + k)}^{\alpha^\prime} - 1} \times (1 - \pi)^{\overbrace{[\beta^* + (N - k)]}^{\beta^\prime} - 1} }{ B(\alpha^* + k, \beta^* + [N - k])} \\ &= \frac{ \pi^{\alpha^\prime - 1} \times (1 - \pi)^{\beta^\prime - 1} }{ B(\alpha^\prime, \beta^\prime)}, \text{where } \alpha^\prime = \alpha^* + k \text{ and } \beta^\prime = \beta^* + [N - k] \end{aligned}` $$ This is an elegant, simple solution. To obtain the parameters for the beta posterior distribution, we just... - add the number of tops (Bernoulli successes) to the prior value for `\(\alpha\)` - add the number of not-tops (sides and bottoms; Bernoulli failures) to the prior value for `\(\beta\)`. --- # The Posterior (The Fun Part) Suppose that I tossed the toothpaste cap 150 times and got 8 tops. <!-- --> --- # Method of Moments Suppose a random variable `\(X\)`. Then we refer to `\(E(X^k)\)` as the `\(k\)`-th **moment** of the distribution or population. Similarly, we refer to `\(\text{avg}(x^k)\)` as the `\(k\)`-th **sample moment**. For example, recall that `\(V(X) = E \left(X^2 \right) - \left[ E(X)\right]^2\)`. In example the variance of `\(X\)` is the difference between the second moment and the square of the first moment. --- # Method of Moments Recall that the law of large numbers guarantees that `\(\text{avg}(x) \xrightarrow[]{p} E(X)\)`. Thus, the first sample moment (the average) converges in probability to the first moment of `\(f\)` (the expected value or mean). By the law of the unconscious statistician, we can similarly guarantee that `\(\text{avg}(x^k) \xrightarrow[]{p} E(X^k)\)`. Thus, the sample moments converge in distribution to moments of `\(f\)`. --- # Method of Moments Now suppose that `\(f\)` has parameters `\(\theta_1, \theta_2, ..., \theta_k\)` so that `\(X \sim f(\theta_1, \theta_2, ..., \theta_k)\)`. We know (or can solve) for the moments of `\(f\)` so that `\(E(X^1) = g_1(\theta_1, \theta_2, ..., \theta_k)\)`, `\(E(X^2) = g_2(\theta_1, \theta_2, ..., \theta_k)\)`, and so on. To use the method of moments, set the first `\(k\)` sample moments equal to the first `\(k\)` moments of `\(f\)` and relabel `\(\theta_i\)` as `\(\hat{\theta}_i\)`. Solve the system of equations for each `\(\hat{\theta}_i\)`. --- # Example: Gamma Distribution Suppose we have `\(N\)` samples with replacement `\(x_n\)` for `\(n \in \{1, 2, ..., N\}\)` from a `\(\text{gamma}(\alpha, \beta)\)` distribution. Take a second to understand the gamma distribution. (Wikipedia is great!) --- # Example: Gamma Distribution We can use the method of moments to derive estimators of `\(\alpha\)` and `\(\beta\)`. Since we have two parameters, we set the first two sample moments equal to the first two moments of the gamma distribution, add hats, and solve. For the gamma distribution: `\(E(X) = \frac{\alpha}{\beta}\)` `\(E(X^2) = V(X) + E(X)^2 = \frac{\alpha}{\beta^2} + \left( \frac{\alpha}{\beta} \right)^2 = \frac{\alpha(\alpha + 1)}{\beta^2}\)`. --- # Example: Gamma Distribution Moments of the gamma distribution: $$ `\begin{aligned} \displaystyle E(X) & = \frac{\alpha}{\beta}\\ \displaystyle E(X^2) & = \frac{\alpha(\alpha + 1)}{\beta^2}\\ \end{aligned}` $$ Set the first two sample moments equal to the first two moments of the gamma distribution and add hats. $$ `\begin{aligned} \displaystyle \frac{1}{N} \sum_{n = 1}^{N} x_n &= \frac{\hat{\alpha}}{\hat{\beta}}\\ \displaystyle \frac{1}{N} \sum_{n = 1}^{N} x_n^2 &= \frac{\hat{\alpha}(\hat{\alpha} + 1)}{\hat{\beta}^2}\\ \end{aligned}` $$ --- # Example: Gamma Distribution $$ `\begin{aligned} \displaystyle \frac{1}{N} \sum_{n = 1}^{N} x_n &= \frac{\hat{\alpha}}{\hat{\beta}}\\ \displaystyle \frac{1}{N} \sum_{n = 1}^{N} x_n^2 &= \frac{\hat{\alpha}(\hat{\alpha} + 1)}{\hat{\beta}^2}\\ \end{aligned}` $$ For simplicity, let `\(m_1 = \frac{1}{N} \sum_{n = 1}^{N} x_n\)` and `\(m_2 = \frac{1}{N} \sum_{n = 1}^{N} x_n^2\)`. Then, solving for `\(\hat{\alpha}\)` and `\(\hat{\beta}\)`, we obtain $$ `\begin{aligned} \displaystyle \hat{\alpha} &= \frac{m_1^2}{m_2 - m_1^2}\text{ and }\\ \displaystyle \hat{\beta} &= \frac{m_1}{m_2 - m_1^2}\text{.}\\ \end{aligned}` $$ --- # Example: Toothpaste Cap Problem 1. Set the first moment of the sample to the first moment of the Bernoulli distribution. 1. Add a hat to the quantities to estimate. 1. Solve. --- # Maximum Likelihood Suppose we have a random sample from a distribution `\(f(x \mid \theta)\)`. We find the maximum likelihood (ML) estimator `\(\hat{\theta}\)` of `\(\theta\)` by maximizing the likelihood of the observed data with respect to `\(\theta\)`. In short, we take the likelihood from earlier and find the parameter `\(\theta\)` that maximizes it. `\(f(x | \pi) = \pi^{k} (1 - \pi)^{(N - k)}, \text{where } k = \sum_{n = 1}^N x_n\)` --- # Maximum Likelihood `\(f(x | \pi) = \pi^{k} (1 - \pi)^{(N - k)}, \text{where } k = \sum_{n = 1}^N x_n\)` In practice, to make the math and/or computation a bit easier, we manipulate the likelihood function in two ways: 1. Relabel the likelihood function `\(f(x \mid \theta) = L(\theta)\)`, since it's weird to maximize with respect to a conditioning variable. 1. Work with `\(\log L(\theta)\)` rather than `\(L(\theta)\)`. Because `\(\log()\)` is a monotonically increasing function, the `\(\theta\)` that maximizes `\(L(\theta)\)` also maximizes `\(\log L(\theta)\)`. --- # Maximum Likelihood Suppose we have **independent** samples `\(x_1, x_2, ..., x_N\)` from `\(f(x \mid \theta)\)`. Then the likelihood is `\(f(x \mid \theta) = \prod_{n = 1}^N f(x_n \mid \theta)\)`. (Why can we just multiply these?) Then `\(\log L(\theta) = \sum_{n = 1}^N \log \left[ f(x_n \mid \theta) \right]\)`. The ML estimator `\(\hat{\theta}\)` of `\(\theta\)` is `\(\arg \max \log L(\theta)\)`. In applied problems, we might be able to simplify `\(\log L\)` substantially. Occasionally, we can find a nice analytical maximum. In many cases, we have a computer find the parameter that maximizes `\(\log L\)`. --- # Example: Toothpaste Cap Problem For the toothpaste cap problem, we have the following likelihood, which I'm borrowing directly from earlier. `\(f(x | \pi) = \pi^{k} (1 - \pi)^{(N - k)}, \text{where } k = \sum_{n = 1}^N x_n\)` Relabel. `\(L(\pi) = \pi^{k} (1 - \pi)^{(N - k)}\)` Take the log and simplify. `\(\log L(\pi) = k \log (\pi) + (N - k) \log(1 - \pi)\)` --- # Example: Toothpaste Cap Problem `\(\log L(\pi) = k \log (\pi) + (N - k) \log(1 - \pi)\)` To find the ML estimator, we find `\(\hat{\pi}\)` that maximizes `\(\log L\)`. In this case, the analytical optimum is easy. $$ `\begin{aligned} \frac{d \log L}{d\hat{\pi}} = y \left( \frac{1}{\hat{\pi}}\right) + (N - y) \left( \frac{1}{1 - \hat{\pi}}\right)(-1) &= 0\\ \frac{y}{\hat{\pi}} - \frac{N - y}{1 - \hat{\pi}} &= 0 \\ \frac{y}{\hat{\pi}} &= \frac{N - y}{1 - \hat{\pi}} \\ y(1 - \hat{\pi}) &= (N - y)\hat{\pi} \\ y - y\hat{\pi} &= N\hat{\pi} - y\hat{\pi} \\ y &= N\hat{\pi} \\ \hat{\pi} &= \frac{y}{N} = \text{avg}(x)\\ \end{aligned}` $$ $$ `\begin{aligned} \hat{\pi} &= \frac{y}{N} = \text{avg}(x)\\ \end{aligned}` $$ The ML estimator for the Bernoulli distribution is the fraction of successes, or, equivalently, the average of the Bernoulli trials. The collected data consist of 150 trials and 8 successes, so the ML estimate of `\(\pi\)` is `\(\frac{8}{150} \approx 0.053\)`. Compare the ML estimate with the posterior mean, median, and mode above. --- # Evaluating Point Estimates Three frequentist criteria: 1. bias 1. consistency 1. MVUE or BUE --- # Bias Imagine repeatedly sampling and computing the estimate `\(\hat{\theta}\)` of the parameter `\(\theta\)` for each sample. In this thought experiment, `\(\hat{\theta}\)` is a random variable. We say that `\(\hat{\theta}\)` is **biased** if `\(E(\hat{\theta}) \neq \theta\)`. We say that `\(\hat{\theta}\)` is **unbiased** if `\(E(\hat{\theta}) = \theta\)`. We say that the **bias** of `\(\hat{\theta}\)` is `\(E(\hat{\theta}) - \theta\)`. --- # Example: The Toothpaste Cap Problem For example, we can compute the bias of our ML estimator of `\(\pi\)` in the toothpaste cap problem. $$ `\begin{aligned} E\left[ \frac{k}{N}\right] &= \frac{1}{N} E(k) = \frac{1}{N} E \overbrace{ \left( \sum_{n = 1}^N x_n \right) }^{\text{recall } k = \sum_{n = 1}^N x_n } = \frac{1}{N} \sum_{n = 1}^N E(x_n) = \frac{1}{N} \sum_{n = 1}^N \pi = \frac{1}{N}N\pi \\ &= \pi \end{aligned}` $$ Thus, `\(\hat{\pi}^{ML}\)` is an unbiased estimator of `\(\pi\)` in the toothpaste cap problem. --- # Example: The Toothpaste Cap Problem What about the posterior mean*? Is it biased? $$ `\begin{aligned} E\left[ \frac{\alpha^* + k}{\alpha^* + \beta^* + N}\right] &= \frac{1}{\alpha^* + \beta^* + N} E(k + \alpha^*) = \frac{1}{\alpha^* + \beta^* + N} \left[ E(k) + \alpha^* \right] \\ & = \frac{1}{\alpha^* + \beta^* + N} \left[ \sum_{n = 1}^N E(x_n) + \alpha^* \right] \\ & = \frac{1}{\alpha^* + \beta^* + N} \left[ \sum_{n = 1}^N \pi + \alpha^* \right] \\ & = \frac{N\pi + \alpha^*}{\alpha^* + \beta^* + N} \end{aligned}` $$ --- # Consistency Imagine take a sample of size N and compute the estimate `\(\hat{\theta}_N\)` of the parameter `\(\theta\)`. We say that `\(\hat{\theta}\)` is a **consistent** estimator of `\(\theta\)` if `\(\hat{\theta}\)` converges in probability to `\(\theta\)`. Intuitively, this means the following: 1. For a large enough sample, the estimator returns the exact right answer. 1. For a large enough sample, the estimate `\(\hat{theta}\)` does not vary any more, but collapses onto a single point and that point is `\(\theta\)`. --- # Consistency Under weak, but somewhat technical, assumptions that usually hold, ML estimators are consistent. Under even weaker assumptions, MM estimators are consistent. --- # Consistency Given that we always have finite samples, why is consistency valuable? --- # Consistency It does not follow that consistent estimators work well in small samples. Consider the estimator `\(\hat{\pi}^{Bayes}\)`. By appropriate (i.e., large) values for `\(\alpha^*\)` and `\(\beta^*\)`, we can make the `\(E(\hat{\pi}^{Bayes})\)` whatever we like in the `\((0, 1)\)` interval. But `\(E(\hat{\pi}^{Bayes})\)` is consistent *regardless* of the values we choose for `\(\alpha^*\)` and `\(\beta^*\)`. Even though posterior mean is consistent, it can be **highly biased** for finite samples. However, as a rough guideline, consistent estimators work well for small samples. However, whether they actually work well in any particular situation needs a more careful investigation. --- # MVUE Imagine repeatedly sampling and computing the estimate `\(\hat{\theta}\)` of the parameter `\(\theta\)` for each sample. In this thought experiment, `\(\hat{\theta}\)` is a random variable. We refer to `\(E \left[ (\hat{\theta} - \theta)^2 \right]\)` as the **mean-squared error* (MSE) of `\(\hat{\theta}\)`. Some people use the square-root of the MSE, which we refer to as the root-mean-squared error (RMSE). In general, we prefer estimators with a smaller MSE to estimators with a larger MSE. --- # MVUE Notice that an estimator can have a larger MSE because (1) it's more variable or (2) more biased. To see this, we can decompose the MSE into two components. $$ `\begin{aligned} E \left[ (\hat{\theta} - \theta)^2 \right] &= E \left[ (\hat{\theta} - E(\hat{\theta})\right] ^2 + E(\hat{\theta} - \theta)^2\\ \text{MSE}(\hat{\theta}) &= \text{Var}(\hat{\theta}) + \text{Bias}(\hat{\theta})^2 \end{aligned}` $$ --- # MVUE When designing an estimator, we usually follow this process: 1. Eliminate biased estimators, if an unbiased estimator exists. 1. Among the remaining unbiased estimators, select the one with the smallest variance. (The variance equals the MSE for unbiased estimators.) This process does not necessarily result in the estimator with the smallest MSE, but it does give us the estimator with the smallest MSE *among the unbiased estimators.* This seems tricky---how do we know we've got the estimator with the smallest MSE among the unbiased estimator? Couldn't there always be another, better unbiased estimator that we haven't considered? It turns out that we have a theoretical lower bound on the variance of an estimator. No unbiased estimator can have a variance below the **Cramér-Rao Lower Bound**. If an unbiased estimator equals the Cramér-Rao Lower Bound, then we say that estimator **attains** the Cramér-Rao Lower Bound. We refer to an estimator that attains the Cramér-Rao Lower Bound as the **minimum-variance unbiased estimator** (MVUE) or the **best unbiased estimator** (BUE). --- # MVUE A MVUE estimator is the gold standard. It is possible, though, that a *biased* alternative estimator has a smaller MSE. (e.g., the posterior mean with the right prior distribution) It's beyond our scope to establish whether particular estimators are the MVUE. However, in general, the *sample average* is an MVUE of the expected value of a distribution. Alternatively, the sample average is the MVUE of the population average. If you are using `\(\hat{\theta} = \text{avg}(x)\)` to estimate `\(\theta = E(X)\)`, then `\(\hat{\theta}\)` is an MVUE.